Explanation

From my experience the problem often occurs on SQL statements where there is a column in the WHERE clause with very different amounts of data. Below is an example of a simple table call dbo.customer. We have a big difference in the amount of records between the cities values.

City Count

Halmstad 1000000

Kiruna 1

London 100

Paris 10

To get the

data the SQL engine chooses a different approach, if it is a low selectivity or

a high one. The city named Kiruna, use a Key Lookup, but for Halmstad a Clustered

Index Scan is the best choice.

SELECT

* FROM dbo.customer WHERE City = 'Kiruna'

SELECT * FROM dbo.customer WHERE City = 'Halmstad'

If we

create a stored procedure for this SELECT statement instead, we will probably

see issues with parameter sniffing.

CREATE

PROCEDURE USP_GetCustomerByCity @City VARCHAR(50)

AS

SELECT

* FROM dbo.customer WHERE City = @City

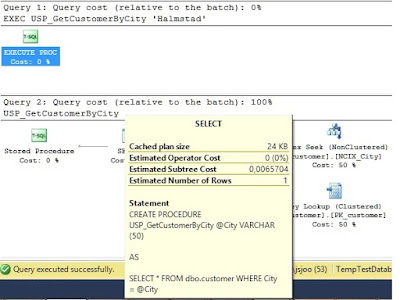

If

we now run this stored procedure with the high selectivity value for city Kiruna

it will be compiled with this particular SELECT statements query plan, which

use a Key Lookup. Then if we change and run the procedure with the low

selectivity value for the City Halmstad it still compile with the first value.

This even if a Clustered Index Scan would perform better. You can also see that

SQL assume it is one row with this value, see “Estimated Number of Rows”.

If the SQL

had run a Clustered Index Scan instead of a Key Lookup, which it should have

done with correct compilation, we should have seen a dramatic difference in the

reads, as you can see from the output from Statistics IO. First with a

Clustered Index Scan and second with a Key Lookup.

Table

'customer'. Scan count 1, logical reads 7316

Table

'customer'. Scan count 1, logical reads 3002730

Find the problematic stored procedures

So how is

it possible to troubleshoot this and find the stored procedures who perform badly?

Unfortunately, there is no place just to check this in an easy way in SQL.

In one approach to try finding out which

procedure suffers or should possible suffer from parameter sniffing; we are

interested in queries with big difference in the logical reads. In the DMV sys.dm_exec_query_stats

SQL collects information about queries. This DMV has the information from all

the queries in the cache. How many times they have executed, amount of rows

returned and so on. In this case, we can get use of the MIN and MAX logical

reads done for our query. Let us run the statement below.

SELECT

qs.execution_count,

SUBSTRING(qt.text,qs.statement_start_offset/2

+1,

(CASE WHEN qs.statement_end_offset =

-1

THEN

LEN(CONVERT(nvarchar(max), qt.text)) * 2

ELSE qs.statement_end_offset end

-

qs.statement_start_offset

)/2

) AS query_text,

qs.last_logical_reads,

qs.max_logical_reads,

qs.min_logical_reads,

qs.total_logical_reads

FROM

sys.dm_exec_query_stats AS

qs

CROSS

APPLY sys.dm_exec_sql_text(qs.sql_handle) AS qt

WHERE

qt.text like '%USP_GetCustomerByCity%'

ORDER

BY qs.execution_count

DESC;

This returns the information about this

examples particular procedure, and it says it has been executed seven times,

and the Max_logical_read is 3002734 compared to the Min_logical_read that is 6.

It is quite a big difference, and it reflects the information I received by

Statistics IO quite good, when I did run the SELECT statements outside the

procedure in the first run.

Workarounds

One way to solve the problem, as I have find myself

doing often, is to run EXEC

SP_UPDATESTATS. Probably not the best way to solve it, but it

often works since it also triggers a recompile of stored procedures. Often you

are in hurry and not sure if maybe the statistics is bad, which is a common

problem as well. However, a correct solution would of course be to code the

procedure correctly instead. There are a few ways to do it, as described in short

here.

Create the

procedure with the option “WITH RECOMPILE”

CREATE PROCEDURE

[dbo].[USP_GetCustomerByCity] @City VARCHAR(50)

WITH

RECOMPILE

AS

SELECT

* FROM dbo.customer WHERE City = @City;

Create the

procedure with the option “OPTIMIZE FOR” as the example below. I think this is

the best approach in newer versions of SQL.

CREATE PROCEDURE

[dbo].[USP_GetCustomerByCity] @City VARCHAR(50)

AS

SELECT

* FROM dbo.customer WHERE City = @City

OPTION

(OPTIMIZE FOR

(@City =

'Halmstad'))

This option

can be useful when you can´t change the procedure. By create a Plan Guide for

the procedure you can set the options there instead of changing the procedure.

If the parameter values are known while compiling the stored procedure,

the optimizer uses the statistics histogram and generate the best plan for the

parameters. When we define local variables and use that in the query, the SQL

server will not be able use the parameter values to find the optimal value. In

this scenario, the optimizer uses density vector information of the statistics,

and it will generate the same execution plan for all input parameters.

CREATE PROCEDURE

[dbo].[USP_GetCustomerByCity] @City VARCHAR(50)

AS

DECLARE

@LocalVariable VARCHAR(50) = @City

SELECT

* FROM dbo.customer WHERE City = @LocalVariable

Conclusion

Even if this is a quite common problem, I see

that many developers are not aware about this and how SQL works. To find the

problem procedures you have to dig into the plan_cache, and it is quite

difficult to find the problem. I think and hope the query_store in SQL 2016 can

be useful to trouble shoot this kind of problem. I will try it out when it is

more available.